Standards and Assurance Framework for Ethical AI (SAFE AI) Project

AI has the ability to accelerate safe innovation and change humanitarian action for the better. Yet there is a real risk that an underfunded, overstretched humanitarian sector will accelerate towards unsafe uses of AI to cut costs, with serious unintended consequences for vulnerable populations.

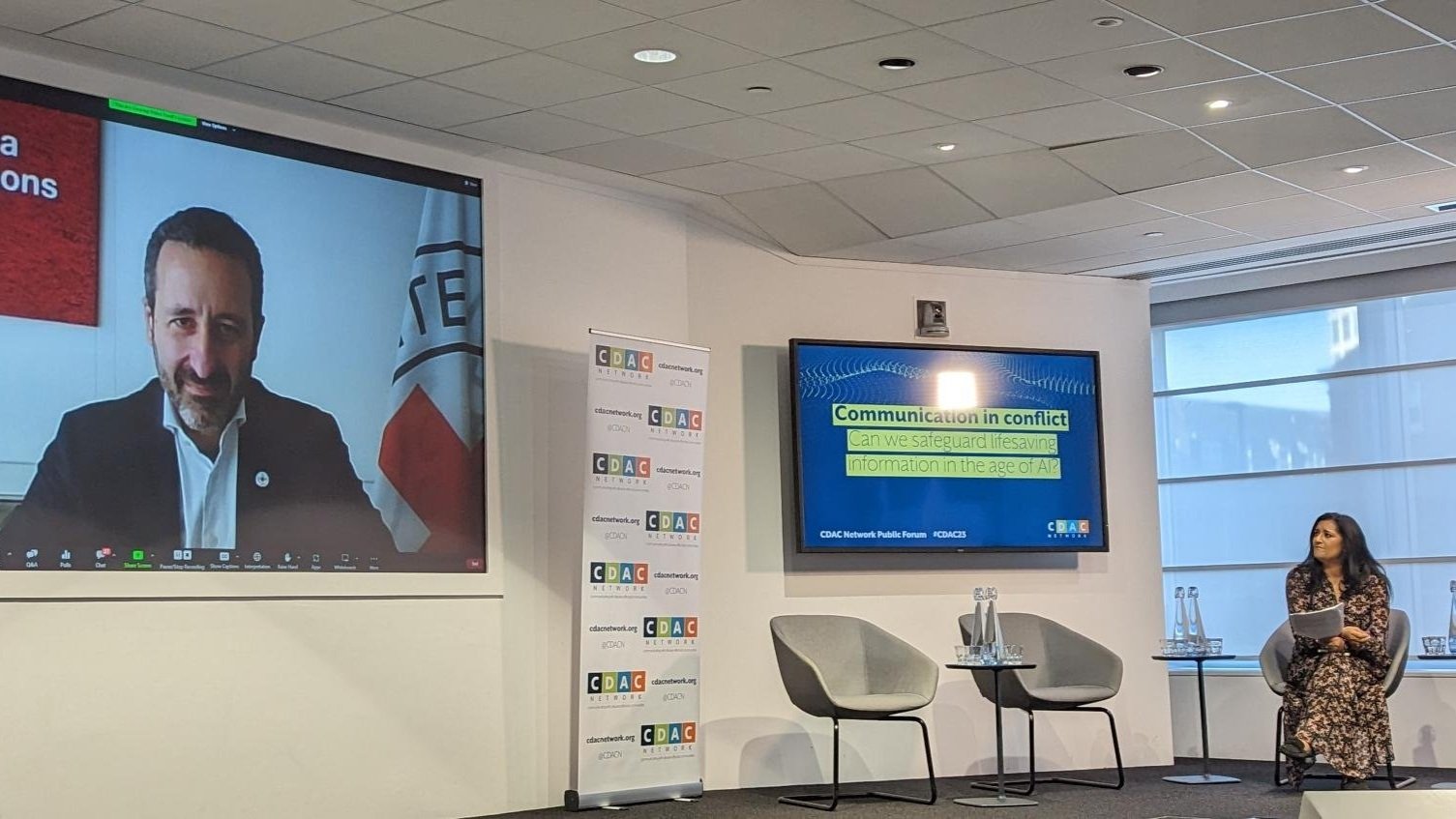

CDAC Network, The Alan Turing Institute and Humanitarian AI Advisory have partnered to launch the SAFE AI project: Standards and Assurance Framework for Ethical Artificial Intelligence. This initiative, funded by the UK Foreign, Commonwealth & Development Office (FCDO), will develop a practical, usable foundational framework for enabling responsible AI in humanitarian action.

We are creating practical AI compliance and regulation guidelines; developing AI technological assurance tools to check whether AI systems are fair and trustworthy; ensuring that affected communities can participate (community-in-the-loop) and have a real say in how AI is used; and engaging with humanitarian organisations to build solutions that address their actual needs.

We are in the beta phase!

The SAFE AI project is currently in its beta phase. We are testing the framework in real-world, practical situations ahead of final publication in 2026. We want to hear from you! If you have any feedback or insights on any of the beta products, email info@cdacnetwork.org or fill in this feedback survey.

Project focus

AI Compliance and Regulation

The framework will increase the humanitarian sector's understanding of AI regulatory standards and the benefits of compliance. We will create clear guidelines for humanitarian contexts that align with global best practice.

AI Technological Assurance

The framework will provide practical tools and approaches to evaluate, validate and improve AI systems. It will help humanitarian organisations assess AI models for fairness, reliability and trustworthiness, building confidence for responsible AI adoption across the sector.

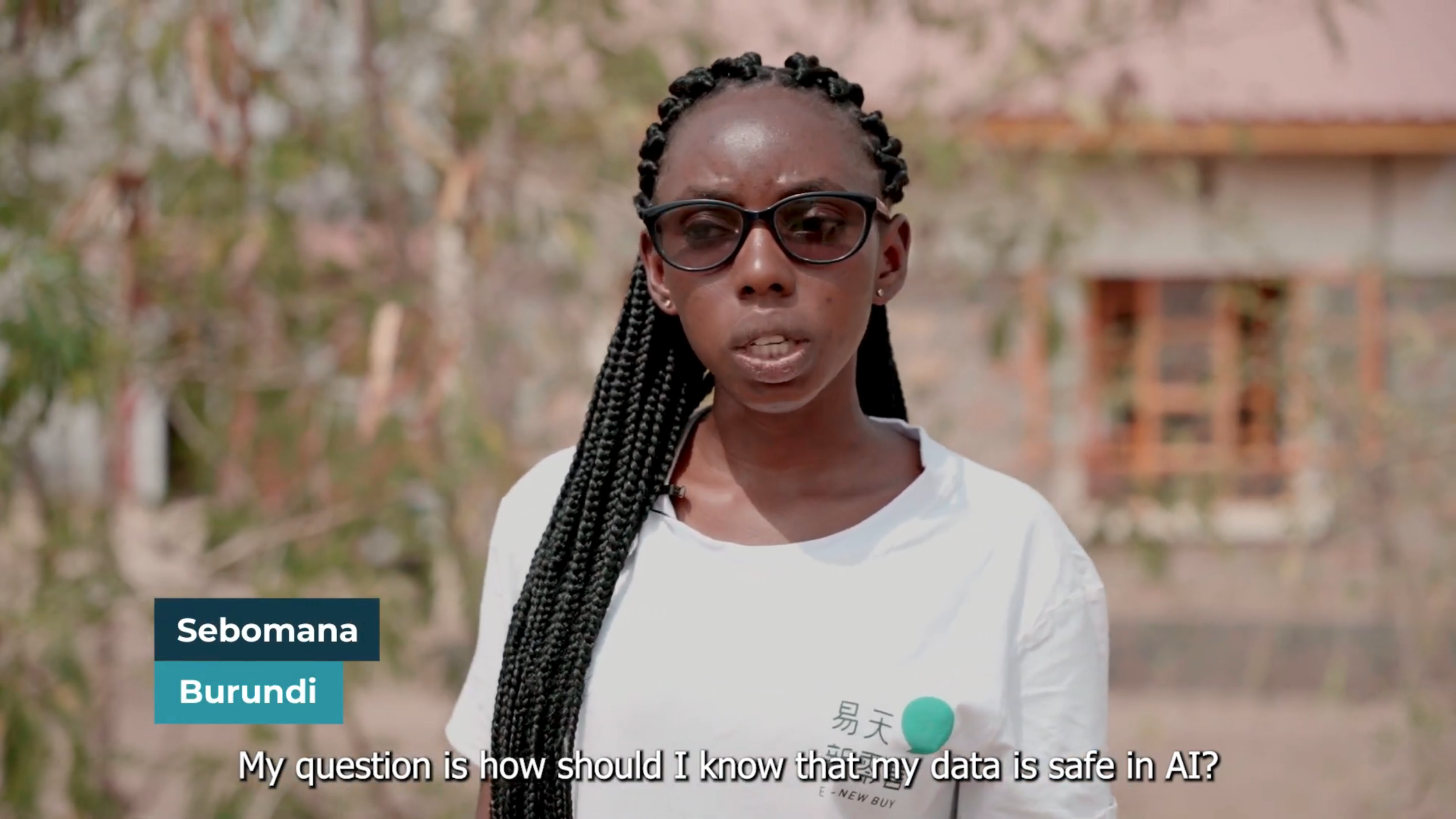

Community Participation

The framework will provide a participatory AI playbook with methods and guidance for meaningful engagement with crisis-affected communities. We want to make sure communities are in the loop and have a voice in how AI technologies are implemented in humanitarian contexts.

Humanitarian Engagement

Humanitarian organisations will be directly involved in the creation, testing and refinement of the SAFE AI framework. This collaborative approach ensures our solutions address real-world challenges faced by frontline responders.

Meet the Team

Anjali Mazumder

Research Director, AI, Accountability, Inclusion & Rights, The Alan Turing Institute

Helen McElhinney

Executive Director & SAFE AI Lead, CDAC Network

Michael Tjalve

Founder, Humanitarian AI Advisory

Suzy Madigan

Participation and AI Lead, CDAC Network

Get in touch.

We want to hear your ideas. If you would like to be involved, or have a project, research, feedback or tips we should know about, please contact us via the online form or at info@cdacnetwork.org.

This project has been funded by UK International Development from the UK government.